Language Acquisition

A project done with Arjan Nusselder, Carsten van Weelden and Andreas van Cranenburgh during the second year of the AI undergraduate program at the UvA in June 2007. Supervised by Remko Scha.

Abstract

How do humans learn language? According to the usage-based model of construction grammar, language is learned inductively: new linguistic input is interpreted by analogy with previously encountered language. But how does one begin to interpret linguistic input before having any experience to compare it to?

Introduction

Human language differs from animal communication in several ways. Language is symbolic: it can refer to anything in the world, observable or unobservable, which makes it possible to describe hypothetical situations. Language is grammatical: it assigns meanings to individual words, and also new meanings to particular sequences of words. Language is acquired during individual development: its form depends greatly on the individual’s surroundings and is far from uniform across the world.

It is unknown when language originated, why it has not been observed in other species, and why it varies so strongly from one community to another. Its origin poses a classic continuity paradox: the human capacity for symbolic communication may have triggered the evolution of language, or the onset of language may have enabled symbolic communication.

Either way, each individual’s acquisition of language is a miniature evolution of its own. Every human learns the linguistic behavior of the community in which they are raised. Therefore, every human must be flexible enough at birth to acquire any of the languages that exist on earth.

A language begins with words. A child might learn the meaning of a word by hearing it while observing the situation in which it is used, and thus come to map the word onto that situation. This immediately raises a frame problem: even if the child manages to pick out a single word, how will it know which part of reality that word refers to?
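The frame problem can be narrowed, though not solved, by comparing several situations in which the same word is heard: features that are present every time are better referent candidates than features that appear only once. The sketch below illustrates this idea, known in the literature as cross-situational learning. It is not the method used in this project; the function name `cross_situational`, the representation of a scene as a set of feature labels, and the toy scenes are all hypothetical.

```python
from collections import Counter, defaultdict


def cross_situational(observations):
    """Hypothetical sketch: map each word to the situation features it
    co-occurred with most often.

    observations: iterable of (words, features) pairs, one per observed scene.
    Returns {word: (set_of_best_features, fraction_of_scenes_with_them)}.
    """
    heard = Counter()               # number of scenes in which each word was heard
    cooccur = defaultdict(Counter)  # word -> feature -> number of shared scenes
    for words, features in observations:
        for w in set(words):
            heard[w] += 1
            cooccur[w].update(set(features))
    meanings = {}
    for w, counts in cooccur.items():
        top = max(counts.values(), default=0)
        best = {f for f, n in counts.items() if n == top}
        meanings[w] = (best, top / heard[w])
    return meanings


# Toy scenes (invented for illustration): what the child hears, and what it sees.
scenes = [
    (["look", "ball"], {"ball", "dog", "red"}),
    (["ball", "gone"], {"ball", "table", "absent"}),
    (["look", "dog"], {"dog", "grass", "ball"}),
    (["dog", "gone"], {"dog", "absent", "door"}),
]

meanings = cross_situational(scenes)
for word in sorted(meanings):
    best, share = meanings[word]
    print(word, sorted(best), share)
# last line printed: look ['ball', 'dog'] 1.0  (still ambiguous)
```

Here "ball", "dog" and "gone" each end up with a single consistent candidate, while "look" remains ambiguous between `ball` and `dog` because both were present whenever it was heard. Simple co-occurrence counting reduces the frame problem but does not by itself resolve it.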

Even while children are still trying to extract meaning from adult utterances, they can already begin learning syntactic structure. Learning the first syntactic constructions does not differ much from learning the first words: the child observes events while trying to discern the speaker’s communicative intention. Every language has its own grammatical conventions, and all of them need to be learnable.

One could even argue that children do not necessarily segment language at word boundaries, and that the smallest units of language are not single words or morphemes but small grammatical constructions.

Regardless, as the child is learning its first words and grammatical constructions, it makes many mistakes. How are these corrected? Does it remember all of the situations it has seen before? How does it learn word order? In short, how is language represented in the mind of a child?

Background

There is no consensus on how language is stored in the mind. Neurobiological research has made progress in locating where in the brain language is processed and produced, but there is still disagreement on how it is processed and produced. The main positions in this debate are those of the generative grammarians (Chomsky, Pinker) and the construction grammarians (Kay, Chang, Fillmore, Tomasello). This project builds on the work of the construction grammarians, and in particular follows the stages of language acquisition defined by de Kreek.

Experiment

The debate between the two opposing views of language remains mainly theoretical. To determine whether either view offers a possible account of how language is stored and acquired, we need actual evidence. If a computational model could acquire language in the way the generative or the construction grammarians postulate, that would show the corresponding view to be plausible.

Here we present a prototype implementation of a computational model of language acquisition based on construction grammar. If the model is successful, it should be able to learn syntactic constructions and semantics without an innate grammar. Most importantly, it should be able to acquire the first corpus that a child later uses to interpret new linguistic input.

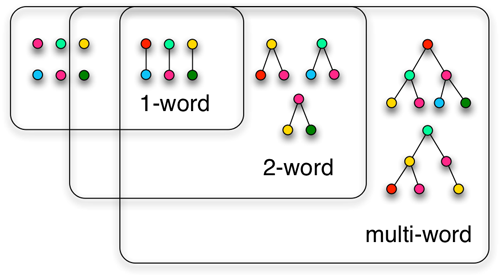

Can we make a computational model of how a child acquires its first corpus? We have attempted to build a model of performance-based language acquisition up to and including two-word constructions. We would like the model to be able to generate new two-word constructions from arbitrary situational input frames.
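To illustrate what generating new two-word constructions from situational input frames could look like, here is a minimal sketch. It assumes that a frame is a mapping from roles (agent, action, object) to concepts and that the child already has a word-to-concept lexicon. It counts which ordered pair of roles is expressed in observed two-word utterances and reuses the most frequent order for a new frame. The role names, the functions `learn_orders` and `generate`, and the example data are hypothetical and do not describe the project's actual implementation.

```python
from collections import Counter


def learn_orders(examples, lexicon):
    """Hypothetical sketch: count ordered role pairs in observed two-word utterances.

    examples: list of (frame, (w1, w2)); a frame maps role -> concept.
    lexicon:  word -> concept (assumed to be learned already).
    """
    orders = Counter()
    for frame, (w1, w2) in examples:
        concept_to_role = {c: r for r, c in frame.items()}
        r1 = concept_to_role.get(lexicon.get(w1))
        r2 = concept_to_role.get(lexicon.get(w2))
        if r1 and r2:  # only count utterances where both words fill a role
            orders[(r1, r2)] += 1
    return orders


def generate(frame, orders, lexicon):
    """Produce a two-word utterance for a new frame, using the most frequent
    learned role order whose two roles can both be expressed with known words."""
    concept_to_word = {c: w for w, c in lexicon.items()}
    for (r1, r2), _count in orders.most_common():
        c1, c2 = frame.get(r1), frame.get(r2)
        if c1 in concept_to_word and c2 in concept_to_word:
            return (concept_to_word[c1], concept_to_word[c2])
    return None  # no learned order fits this frame


lexicon = {"mommy": "MOTHER", "daddy": "FATHER", "eat": "EAT",
           "throw": "THROW", "ball": "BALL", "cookie": "COOKIE"}
examples = [
    ({"agent": "MOTHER", "action": "EAT", "object": "COOKIE"}, ("mommy", "eat")),
    ({"agent": "FATHER", "action": "THROW", "object": "BALL"}, ("throw", "ball")),
    ({"agent": "MOTHER", "action": "THROW", "object": "BALL"}, ("mommy", "throw")),
]

orders = learn_orders(examples, lexicon)
print(generate({"agent": "FATHER", "action": "EAT", "object": "COOKIE"}, orders, lexicon))
# ('daddy', 'eat')
```

Because the sketch stores role orders rather than word pairs, it produces "daddy eat" even though "daddy" never appeared in an observed two-word utterance. A real learner would also have to acquire the lexicon and the role categories themselves, which this sketch simply assumes.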